Overview

Emerge's performance analysis allows you to generate a controlled, statistically sound profile of your app on real, physical devices.

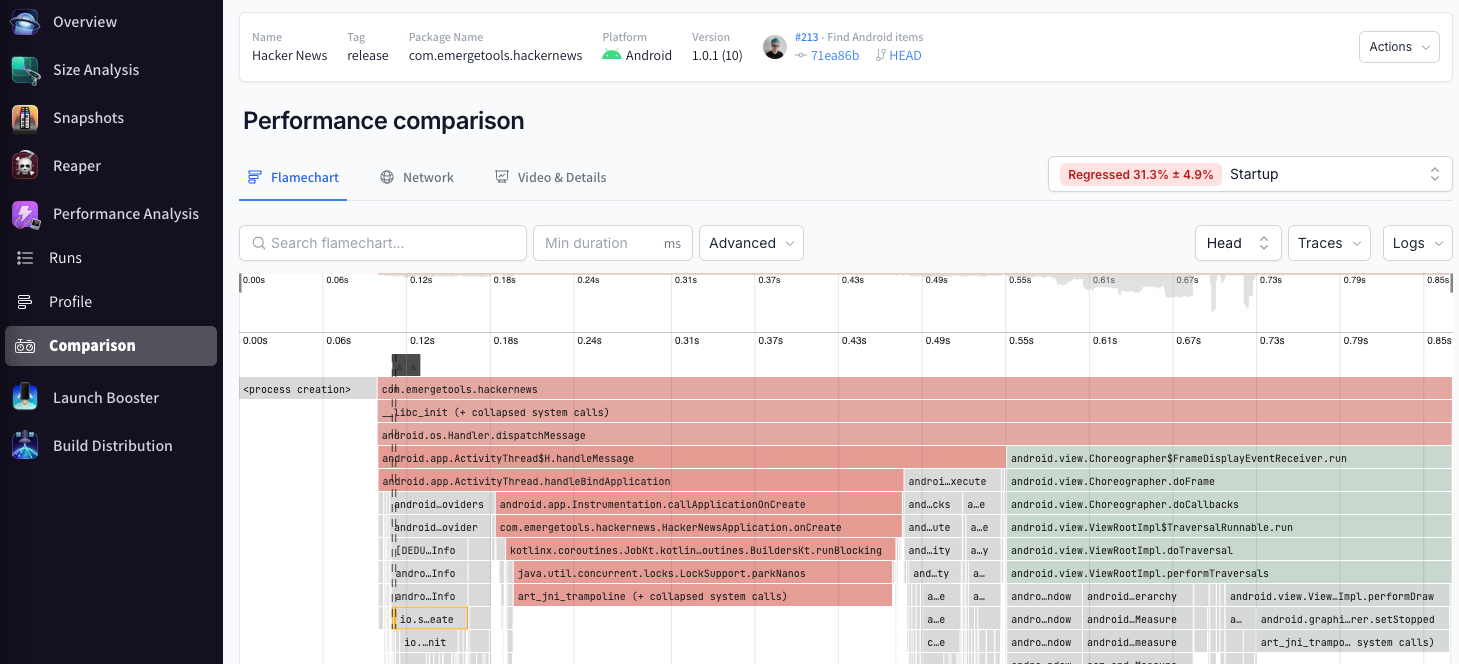

Emerge can also compare performance profiles between two builds of your app, giving you a statistically sound conclusion about key metrics like startup time and whether those metrics have regressed, improved, or remained the same.
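
To make the idea of a statistically sound comparison concrete, here is a minimal sketch of one common approach: a bootstrap confidence interval on the difference in median startup time between two builds. This is an illustration only and is not Emerge's actual method. The function name `compare_startup`, its parameters, and the sample values are all hypothetical.

```python
import random
import statistics


def compare_startup(base_ms, head_ms, n_resamples=2000, seed=0):
    """Classify head vs. base startup times (hypothetical helper).

    Builds a bootstrap 95% confidence interval for
    median(head) - median(base) and returns a verdict plus the interval.
    """
    rng = random.Random(seed)  # fixed seed so the result is reproducible
    diffs = []
    for _ in range(n_resamples):
        # Resample each build's measurements with replacement.
        base_sample = rng.choices(base_ms, k=len(base_ms))
        head_sample = rng.choices(head_ms, k=len(head_ms))
        diffs.append(
            statistics.median(head_sample) - statistics.median(base_sample)
        )
    diffs.sort()
    # Percentile bounds of the interval, using integer index arithmetic.
    low = diffs[n_resamples * 25 // 1000]
    high = diffs[n_resamples * 975 // 1000 - 1]
    if low > 0:
        verdict = "regressed"  # head is reliably slower
    elif high < 0:
        verdict = "improved"  # head is reliably faster
    else:
        verdict = "unchanged"  # interval contains zero
    return verdict, (low, high)


# Made-up startup samples in milliseconds, for illustration only.
base = [500, 505, 510, 495, 502, 498, 507, 503, 499, 501]
head = [600, 605, 610, 595, 602, 598, 607, 603, 599, 601]
verdict, interval = compare_startup(base, head)
```

The verdict is "unchanged" whenever the interval contains zero, so noise between runs is not reported as a regression. A real pipeline would also need to control variance on the device itself, which this sketch does not attempt.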

Why Emerge Performance Analysis?

While other tools exist for performance profiling on both iOS and Android, none offer the full end-to-end suite that Emerge does. Emerge only requires you to upload a build (or two builds for a performance comparison). From there, Emerge handles the hard parts for you, including:

- Simple Gradle plugin & Fastlane integration points

- Device management

- Test orchestration

- Variance control (see Variance control for full details)

- Result handling and posting to common integrations, like Slack, GitHub, and more

Together, these give you a simple, easy-to-understand performance analysis of your app.

iOS Performance Analysis

Emerge supports testing the performance of any iOS flow using XCUITests.

Read the full iOS Performance Analysis documentation.

Android Performance Analysis

By default, Emerge profiles Android apps from process start to the first frame.

Additionally, Emerge can generate performance analyses for any custom flow using UIAutomator tests.

Read the full Android Performance Analysis documentation.

FAQ
